In the race to accelerate development, Enterprise Application Code Generators have emerged as powerful allies, promising to boost developer productivity and slash time-to-market. Yet, despite their allure, many organizations find these sophisticated AI tools falling short, not because they lack advanced features but because of fundamental misalignments in deployment architecture, security, and context understanding within complex enterprise environments. The promise is real, but unlocking it requires a deeper dive than a feature checklist alone can offer.

At a Glance: Key Takeaways for Enterprise AI Code Generators

- Deployment Architecture is Paramount: For regulated industries, how and where your AI code generator is deployed (cloud, on-premises, air-gapped) matters more than its feature set. Security compliance is often the make-or-break factor.

- Context is King, Especially for Multi-Repo: Enterprise codebases spanning hundreds of repositories are a massive challenge. Look beyond raw token window size; the maturity of a tool's Retrieval-Augmented Generation (RAG) and pre-indexing capabilities is crucial for handling complex, cross-repository context.

- Security Compliance is Non-Negotiable: AI refinement can inadvertently exacerbate security vulnerabilities. Prioritize tools with robust certifications (SOC 2, ISO/IEC 27001, ISO/IEC 42001) and integrate systematic human code review processes.

- Proof-of-Concept is Essential: Don't commit without thorough testing. Validate a solution's context accuracy, security alignment, and total cost of ownership against your specific multi-repository codebase.

- Emerging Leaders Offer Deeper Context: Solutions like Augment Code and Sourcegraph Cody are pushing the boundaries of multi-repository context understanding, crucial for truly transforming enterprise development.

The Unseen Iceberg: Why Enterprise AI Code Generators Often Sink (Beyond Features)

You've heard the buzz: AI generating code, writing tests, even refactoring entire modules. It sounds like a dream for any enterprise grappling with technical debt and tight deadlines. But the reality for many organizations, especially those in regulated industries, often diverges sharply from the marketing hype. The culprit isn't usually a lack of ambition or advanced AI models; it's a fundamental misunderstanding of the unique challenges enterprise environments present, particularly around deployment architecture and context.

Think of it this way: a brilliant architect can design a stunning skyscraper, but if the foundation isn't suited to the soil, the building will eventually crack. Similarly, an AI code generator, no matter how intelligent, will fail if its deployment architecture doesn't align with your organizational security, compliance, and infrastructure realities.

The Multi-Repository Context Conundrum: When One File Isn't Enough

Most AI code generation tools gained initial traction by excelling at single-file or small-project contexts. They could see your current file, perhaps a few related ones, and suggest completions or generate functions. This works beautifully for isolated tasks.

However, enterprise codebases are rarely so neat. We're talking about sprawling ecosystems: 50, 100, sometimes 500+ repositories. Millions of lines of code distributed across numerous microservices, legacy systems, and shared libraries. A change in one service might impact dozens of others. For an AI to be truly useful here, it needs to understand this entire interconnected web, not just the file you're currently editing.

Early tools struggled immensely with this: the original GitHub Copilot worked with a modest 2,048-token context (~150 lines of code). Today's 64,000-token windows are a significant leap, yet they still pale in comparison to the sheer volume of code in a typical enterprise. The solutions that stand out handle far more: Augment Code reports processing 400,000-500,000 files across multiple repositories, and Sourcegraph Cody tackles the problem through pre-indexing with vector embeddings, surfacing up to 100,000 lines of related code for monorepo-scale queries. The takeaway? For complex enterprise scenarios, the maturity of a tool's RAG (Retrieval-Augmented Generation) implementation is often far more critical than its raw, theoretical context window size.
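To make the RAG-versus-window distinction concrete, here is a toy sketch of retrieval-augmented context assembly: score code chunks against a query, then pack the best-scoring ones into a fixed token budget. It is a simplification under stated assumptions, not how any of the products named here work internally: bag-of-words cosine similarity stands in for learned vector embeddings, tokens are estimated at roughly 4 characters each, and the repository paths are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Split identifiers such as create_invoice or CustomerId into lowercase words.
    return [w.lower() for w in re.findall(r"[A-Z]?[a-z]+|[A-Z]+(?![a-z])|\d+", text)]

def embed(text: str) -> Counter:
    # Stand-in for a learned embedding: a bag-of-words term-count vector.
    return Counter(tokenize(text))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token.
    return max(1, len(text) // 4)

def build_context(query: str, chunks: dict[str, str], budget_tokens: int) -> list[str]:
    """Return chunk paths, most relevant first, that fit within the token budget."""
    q = embed(query)
    scores = {path: cosine(q, embed(text)) for path, text in chunks.items()}
    ranked = sorted(chunks, key=lambda p: (-scores[p], p))
    selected, used = [], 0
    for path in ranked:
        if scores[path] <= 0:
            break  # remaining chunks share no terms with the query
        cost = estimate_tokens(chunks[path])
        if used + cost <= budget_tokens:
            selected.append(path)
            used += cost
    return selected

# Hypothetical chunks drawn from three different repositories.
chunks = {
    "billing-svc/invoice.py": "def create_invoice(customer_id, amount): ...",
    "customer-svc/models.py": "class Customer: customer_id email",
    "web-ui/theme.css": "body { color: black }",
}
print(build_context("where is an invoice created for a customer", chunks, budget_tokens=50))
# ['billing-svc/invoice.py', 'customer-svc/models.py']
```

The point of the design is that the budget is spent only on code relevant to the query, wherever it lives, rather than on whatever happens to be open in the editor.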

The Security Tightrope: AI Refinement and Unseen Vulnerabilities

Another critical oversight is security. While AI can improve code efficiency, it can also, paradoxically, introduce or exacerbate vulnerabilities. When AI refines code, it might prioritize functionality or brevity over robust security practices, potentially bypassing established checks or introducing subtle flaws that human reviewers might miss.
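A hypothetical illustration of how a "cleaner-looking" refinement can weaken security: both functions below read almost identically, but the second splices user input directly into the SQL text instead of binding it as a parameter, which reintroduces SQL injection. The table, data, and function names are invented for illustration; only Python's built-in `sqlite3` module is used.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "viewer")])
    return conn

# Reviewed version: the driver binds `name` as data, never as SQL.
def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()

# Hypothetical AI "refinement": looks equivalent, but user input becomes part of the query text.
def find_user_refined(conn: sqlite3.Connection, name: str) -> list[tuple]:
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()

conn = make_db()
payload = "x' OR '1'='1"
print(find_user_safe(conn, payload))     # []
print(find_user_refined(conn, payload))  # returns every row in the table
```

A reviewer skimming a diff could easily miss the change from `?` to an f-string, which is why the review and scanning practices discussed in this article matter.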

For regulated industries, where security compliance (think SOC 2, ISO/IEC 27001, CMMC) is not just a best practice but a legal mandate, this risk is magnified. Conventional evaluations of AI tools frequently miss these security implications. The solution isn't to distrust AI entirely, but to approach its integration with a "trust but verify" mindset. This means prioritizing tools with robust security certifications, ensuring data sovereignty, and, crucially, maintaining systematic human code reviews to validate AI-generated output against your organization's security policies. Deployment architecture, dictating where your code and AI models reside, becomes the first line of defense here.
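Automated policy checks can complement, not replace, human review. As a minimal sketch of one such check, the code below uses Python's standard `ast` module to flag `.execute(...)` or `.executemany(...)` calls whose first argument is built at runtime (an f-string with placeholders, a `+` or `%` expression, or a `.format(...)` call). The rule and function names are hypothetical and deliberately simple; a dedicated security scanner covers far more cases.

```python
import ast

def _is_dynamic_string(node: ast.AST) -> bool:
    """True if the expression builds a string at runtime from other values."""
    if isinstance(node, ast.JoinedStr):  # f"... {x} ..."
        return any(isinstance(v, ast.FormattedValue) for v in node.values)
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod)):  # "..." + x, "..." % x
        return True
    if isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute) and node.func.attr == "format":
        return True  # "...".format(x)
    return False

def find_dynamic_sql(source: str) -> list[int]:
    """Return line numbers of execute/executemany calls whose SQL argument is built dynamically."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Attribute)
                and node.func.attr in {"execute", "executemany"}
                and node.args
                and _is_dynamic_string(node.args[0])):
            findings.append(node.lineno)
    return sorted(findings)

snippet = '''
cur.execute("SELECT * FROM users WHERE name = ?", (name,))
cur.execute(f"SELECT * FROM users WHERE name = '{name}'")
cur.execute("DELETE FROM t WHERE id = " + user_id)
'''
print(find_dynamic_sql(snippet))  # [3, 4]
```

Flagged lines would then be routed to a human reviewer rather than merged automatically.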

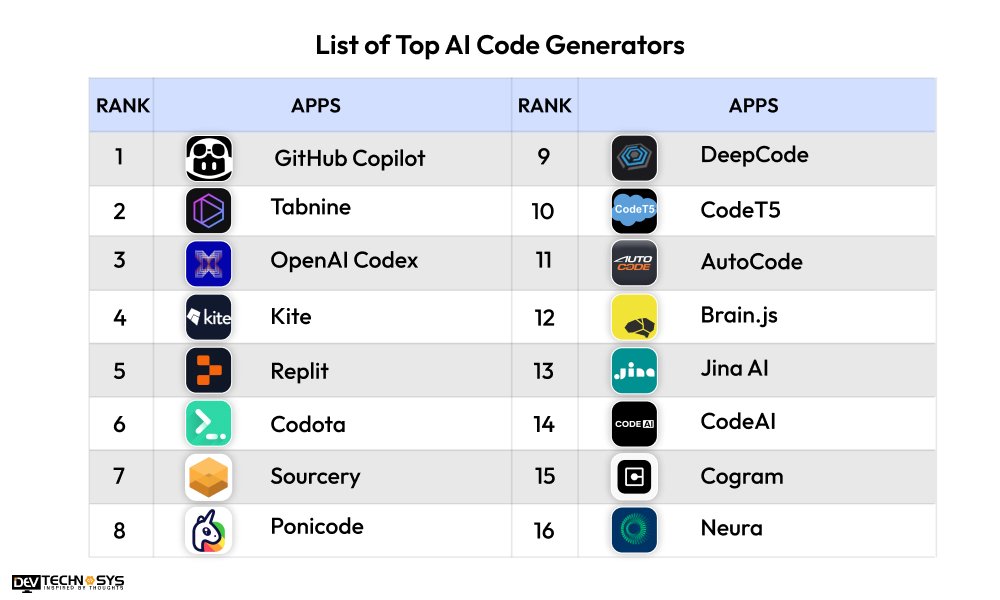

Navigating the Labyrinth: A Look at Leading Enterprise AI Code Generators for 2025

Choosing the right AI code generator isn't a one-size-fits-all decision. Your specific deployment needs, codebase complexity, and compliance requirements will dictate the best fit. Here, we delve into six leading enterprise solutions, outlining their strengths, weaknesses, and ideal use cases.

1. GitHub Copilot Enterprise

What it is: Microsoft's formidable AI coding assistant, deeply embedded within the GitHub ecosystem. It's designed to seamlessly integrate into existing GitHub workflows, providing AI assistance across coding, documentation, and pull requests.

- Core Facts: A Forrester study reports a 376% ROI over three years. Copilot performs best in popular languages such as Python, JavaScript, TypeScript, Ruby, Go, C#, and C++. It supports an unlimited number of repositories without separate indexing, drawing on GitHub's native knowledge of the code it hosts. Microsoft provides IP indemnity, a significant comfort for enterprises concerned about legal exposure. It is powered by advanced LLMs such as GPT-5 and GPT-4.1, and its enterprise security controls include SOC 2 Type II and ISO/IEC 27001:2013 certifications.

- Implementation: Configure settings via your GitHub organization, establish granular policies for usage, deploy the necessary IDE extensions for your development teams, and monitor adoption and impact.

- Infrastructure: Requires GitHub Enterprise Cloud ($21/user/month for core services) with either the Copilot Business ($19/user/month) or Enterprise ($39/user/month) add-ons. It's a cloud-only deployment, leveraging Microsoft's Azure infrastructure.

- When to Choose: Organizations deeply invested in GitHub-native development workflows. Teams where cloud-acceptable security is sufficient for their compliance posture. Development teams primarily using the supported languages for which Copilot has proven superior performance.

- When NOT to Choose: If your organization has strict on-premises or air-gapped deployment requirements. For multi-repository contexts that regularly push beyond documented context windows, as its effectiveness might diminish compared to specialized RAG solutions. Financial services or other highly regulated industries needing all data processing to remain strictly within customer-managed infrastructure.
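Because Copilot is priced here as an add-on to GitHub Enterprise Cloud, the effective per-seat cost is the sum of both line items. Using the list prices quoted above (check current pricing before budgeting) and a hypothetical team of 100 developers:

$$
\begin{aligned}
\text{Copilot Business: } & (\$21 + \$19) \times 12 = \$480 \text{ per user per year} \\
\text{Copilot Enterprise: } & (\$21 + \$39) \times 12 = \$720 \text{ per user per year} \\
\text{100 developers on Copilot Enterprise: } & 100 \times \$720 = \$72{,}000 \text{ per year}
\end{aligned}
$$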

2. Sourcegraph Cody Enterprise

What it is: An enterprise-focused AI assistant built from the ground up to understand massive codebases. Cody leverages sophisticated pre-indexing and Retrieval-Augmented Generation (RAG) to provide highly relevant AI assistance across large, complex code repositories.

- Core Facts: Excels at large-codebase understanding by pre-indexing entire repositories with vector embeddings, serving up to 100,000 lines of related code per query. Sourcegraph is actively testing 1-million-token context windows with Google's Gemini 1.5 Flash, signaling a strong commitment to expanding context capabilities. Holds SOC 2 Type II and ISO/IEC 27001:2022 certifications. Offers flexible deployment options (cloud, self-hosted, and VPC) to suit diverse security and infrastructure needs.

- Implementation: Requires installation of a Sourcegraph instance (can be cloud or self-hosted), configuration of repository indexing (a crucial step for deep context), deployment of Cody extensions to developer IDEs, and monitoring of context retrieval accuracy.

- Infrastructure: For self-hosted deployments, a minimum of 16GB RAM is recommended, typically deployed on a Kubernetes cluster. Cloud deployment options are also available. Be aware that initial pre-indexing for very large repositories can take 24-48 hours.

- When to Choose: Organizations managing truly large codebases, especially those with 50 or more distinct repositories. Enterprises with stringent security requirements that demand either self-hosted, VPC, or highly certified cloud solutions. Development teams that frequently need cross-repository context for complex tasks and benefit from pre-indexed vector embeddings.

- When NOT to Choose: Smaller repositories with fewer than 10,000 files, as the overhead of Sourcegraph's indexing might be excessive. Teams requiring instant deployment and immediate utility (due to the potential 24-48 hour pre-indexing delay for large codebases). Organizations without existing Kubernetes expertise or infrastructure for self-hosted deployments.

3. Tabnine Enterprise

What it is: A versatile AI assistant known for its flexible deployment options, allowing organizations to keep their code entirely local, on-premises, or even air-gapped. This hybrid approach offers unparalleled data sovereignty.

- Core Facts: Offers comprehensive deployment flexibility: cloud, VPC, on-premises, and air-gapped options, ensuring complete data sovereignty. Holds SOC 2 Type II compliance, addressing critical enterprise security needs. Employs a hybrid architecture that uses local models for basic suggestions and cloud models for more advanced capabilities, balancing performance and data control. Tabnine reports that its RAG architecture outperforms fine-tuning for complex enterprise codebases, yielding more relevant and accurate suggestions.

- Implementation: The process involves choosing the optimal deployment architecture (e.g., air-gapped), installing the Tabnine Enterprise server within your chosen environment, configuring authentication and user access, and deploying client extensions to developer IDEs.

- Infrastructure: For on-premises deployments, expect minimum requirements of 32GB RAM, 8 CPU cores, and 500GB storage. Air-gapped deployments entail the complete deployment of AI models within your customer network, ensuring no external data egress.

- When to Choose: Organizations with extreme security or regulatory compliance needs, such as those requiring air-gapped environments for national security, defense contractors needing CMMC Level 2+ compliance, or financial services demanding absolute data sovereignty.

- When NOT to Choose: Teams prioritizing access to the very latest, frequently updated AI models (air-gapped deployments can limit the frequency of model updates). Small organizations with fewer than 25 developers, as the complexity and overhead of on-premises or air-gapped deployment might be disproportionate to the team size. Budget-sensitive projects where the cost and resources for a complex, self-managed deployment are prohibitive.
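Before a self-hosted PoC, it can help to check candidate hosts against the vendor minimums quoted in this article. The sketch below encodes those figures (32GB RAM, 8 CPU cores, and 500GB storage for Tabnine on-premises; 16GB RAM for a self-hosted Sourcegraph instance). The `HostSpec` type, target labels, and `shortfalls` function are hypothetical, and real sizing should follow each vendor's current documentation.

```python
from dataclasses import dataclass, fields

@dataclass(frozen=True)
class HostSpec:
    ram_gb: float
    cpu_cores: int
    storage_gb: float

# Minimums as quoted in this article; 0 means the article gives no figure.
MINIMUMS = {
    "tabnine-onprem": HostSpec(ram_gb=32, cpu_cores=8, storage_gb=500),
    "sourcegraph-selfhosted": HostSpec(ram_gb=16, cpu_cores=0, storage_gb=0),
}

def shortfalls(host: HostSpec, target: str) -> list[str]:
    """List each resource where the host falls below the quoted minimum."""
    need = MINIMUMS[target]
    return [
        f"{f.name}: have {getattr(host, f.name)}, need {getattr(need, f.name)}"
        for f in fields(HostSpec)
        if getattr(host, f.name) < getattr(need, f.name)
    ]

host = HostSpec(ram_gb=24, cpu_cores=16, storage_gb=1000)
print(shortfalls(host, "tabnine-onprem"))          # ['ram_gb: have 24, need 32']
print(shortfalls(host, "sourcegraph-selfhosted"))  # []
```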

4. Augment Code

What it is: An advanced enterprise AI coding assistant celebrated for its robust large-scale codebase handling, industry-leading AI governance certification, and capabilities for autonomous coding, even across multi-repository architectures.

- Core Facts: Boasts one of the largest documented context engines, capable of understanding 400,000 to 500,000 file contexts via selective retrieval mechanisms, making it exceptional for vast, multi-repository environments. It's the first in its category to achieve ISO/IEC 42001 AI governance certification, a testament to its commitment to responsible AI. Compliant with SOC 2 Type II and offers flexible deployment options, including VPC and on-premises, ensuring data control.

- Implementation: Starts with configuring an organization account, followed by installing IDE extensions (VS Code, JetBrains IDEs) and potentially a CLI tool. A key feature to set up is Context Lineage tracking, which helps monitor how the AI processes and understands your codebase. Usage is credit-based, so monitoring credit consumption is also vital.

- Infrastructure: Pricing is credit-based, ranging from approximately $20 to $200 per month depending on usage. Compatible with popular IDEs and offers VPC and on-premises deployment for enhanced security and control.

- When to Choose: Enterprise teams that genuinely need maximum context understanding across exceptionally large, multi-repository codebases. Organizations with a strong focus on AI governance and requiring ISO/IEC 42001 compliance. Development workflows that aim to incorporate autonomous coding capabilities.

- When NOT to Choose: Teams with highly unpredictable usage patterns, since the credit consumption model can translate into unpredictable costs. Smaller organizations that rely on extensive community support, since Augment Code is a specialized enterprise solution with a smaller community than more established tools.
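Because Augment Code bills by credits, a simple run-rate projection can flag overruns before the month ends. The sketch below assumes usage is roughly linear across a 30-day month; the function names, the 90% warning threshold, and the credit figures in the example are all hypothetical, since Augment's actual credit units and rates are not specified here.

```python
def project_month_end(credits_used: float, day_of_month: int, days_in_month: int = 30) -> float:
    """Linear run-rate projection of credits consumed by the end of the month."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must fall within the month")
    return credits_used / day_of_month * days_in_month

def budget_alert(credits_used: float, day_of_month: int, monthly_allowance: float,
                 days_in_month: int = 30, threshold: float = 0.9) -> str:
    """Compare the projection against the allowance and a warning threshold."""
    projected = project_month_end(credits_used, day_of_month, days_in_month)
    if projected > monthly_allowance:
        return f"OVER: projected {projected:.0f} vs allowance {monthly_allowance}"
    if projected > threshold * monthly_allowance:
        return f"WARN: projected {projected:.0f} is above {threshold:.0%} of allowance"
    return f"OK: projected {projected:.0f}"

print(budget_alert(4000, day_of_month=10, monthly_allowance=10000))  # OVER: projected 12000 vs allowance 10000
print(budget_alert(3100, day_of_month=10, monthly_allowance=10000))  # WARN: projected 9300 is above 90% of allowance
```

A linear projection is crude (usage often spikes around releases), but it is enough to surface a team whose consumption pattern makes costs hard to predict.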

5. Cursor

What it is: A unique AI-native code editor built upon the familiar VS Code foundation. Cursor integrates AI capabilities directly into the editing experience, offering features like a composer mode for multi-file editing and a strong focus on privacy.

- Core Facts: Its AI capabilities are native to the IDE, providing a more integrated and fluid experience compared to extensions. Features a "composer mode" allowing developers to edit multiple files simultaneously with AI assistance, streamlining complex changes. Includes a privacy mode with configurable data retention policies, giving organizations control over sensitive code. Delivers sub-100ms response times for simple autocomplete, ensuring a snappy coding experience. Holds SOC 2 Type II certification.

- Implementation: The primary step is deploying the Cursor IDE itself. After installation, configure your Teams workspace, set privacy mode policies according to your organization's requirements, and monitor agent request usage, as this is a key metric for its Teams tier.

- Infrastructure: The Teams tier is priced at $40/user/month and includes 500 agent requests. Built on the highly extensible VS Code architecture, ensuring familiarity for many developers. It relies on cloud-based processing for its advanced AI features, but with robust privacy controls.

- When to Choose: Development teams looking for a fundamentally AI-native editor experience, particularly those who benefit from multi-file editing capabilities. Organizations with sensitive code that require a dedicated privacy mode and configurable data retention. Teams seeking a more integrated AI coding workflow within their editor.

- When NOT to Choose: Teams with a deep and specific investment in their existing VS Code setup and extensions, since migrating to a separate editor may cause some disruption. Organizations with strict on-premises deployment requirements, as Cursor is primarily cloud-based. Security-critical applications where AI-generated code can introduce documented vulnerabilities and every change demands careful scrutiny.

6. Amazon Q Developer

What it is: AWS's native AI coding assistant, purpose-built and optimized for cloud development within the Amazon ecosystem. It offers deep integration with AWS services, robust security scanning, and transparent reference tracking for open-source code.

- Core Facts: Uniquely optimized for AWS APIs, services, and cloud-native development patterns, making it ideal for AWS-centric organizations. Features built-in vulnerability detection for generated code, adding a crucial layer of security. Provides open-source code attribution, aiding in license compliance and managing intellectual property risks.

- Implementation: Enabled directly via the AWS console, requiring configuration of appropriate IAM roles for access control. Developers install IDE extensions for integration into their coding environment. Setup involves configuring security scanning and compliance monitoring within AWS.

- Infrastructure: Requires an active AWS account with the necessary IAM permissions. Integrates with AWS development services such as CodeCatalyst, and succeeds Amazon CodeWhisperer, whose capabilities are now part of Amazon Q Developer. Pricing is integrated into AWS billing, which is convenient for existing AWS customers. It is exclusively a cloud-native AWS service.

- When to Choose: Development teams operating primarily within the AWS ecosystem. Organizations building cloud-native architectures that require deep integration with AWS services for maximum efficiency. Teams needing built-in security scanning for AI-generated code and robust open-source license attribution.

- When NOT to Choose: Non-AWS development environments, as its value proposition is heavily tied to the AWS ecosystem. Organizations with on-premises or air-gapped deployment requirements, as it is a cloud-only service. Multi-cloud strategies where deep AWS integration might lead to vendor lock-in.

Finding Your Fit: A Strategic Approach to Selecting a Code Generator

With the strengths and weaknesses of leading solutions laid bare, how do you make an informed decision for your enterprise? It boils down to aligning the AI tool's capabilities with your specific operational context, regulatory landscape, and technical requirements. This isn't just about features; it's about fit.

Prioritizing Your Non-Negotiables

Your journey starts by clearly identifying your organization's absolute requirements. Skimping here is a recipe for expensive disappointment.

- Air-Gapped or On-Premises Requirements? Embrace Sovereignty.

If your security posture, national regulations, or client contracts demand that no code or data leave your internal network, solutions with robust on-premises, VPC, or, critically, air-gapped deployment options are your only viable path. This immediately narrows your field.

- Choose: Tabnine Enterprise stands out here, offering true air-gapped deployment with complete data sovereignty. Augment Code also provides on-premises options.

- Avoid: Cloud-only solutions like GitHub Copilot Enterprise, Amazon Q Developer, and Cursor simply won't meet these stringent requirements.

- Cracking the Multi-Repository Code? Focus on Context Maturity.

For complex enterprise applications spread across dozens or hundreds of repositories, basic context windows fall short. You need an AI that truly understands the intricate relationships between files and services, not just isolated snippets.

- Choose: Sourcegraph Cody Enterprise excels with its pre-indexing and vector embedding approach, efficiently handling up to 100,000 lines of related code. Augment Code pushes this even further with its advanced context engine processing 400,000-500,000 files via selective retrieval.

- Consider: GitHub Copilot provides unlimited repository support within its ecosystem, but its deep multi-repo context capabilities for highly complex, cross-service interactions may not match dedicated RAG solutions.

- Cloud-Native Dominance? Leverage Ecosystem Integration.

If your development primarily lives within a specific cloud provider's ecosystem, an AI tool deeply integrated into that environment can offer unparalleled efficiency and specialized features.

- Choose: Amazon Q Developer is the clear winner for AWS-centric teams, offering deep integration with AWS services, APIs, and built-in security scanning for cloud-native development.

- Consider: GitHub Copilot Enterprise for organizations fully committed to the GitHub (and by extension, Azure) cloud.

- Unleashing Maximum Context? Scrutinize True Scope.

While advertised context window sizes are impressive, differentiate between theoretical capacity and practical application for real-time suggestions and code generation. For truly colossal codebases, you need an AI that can intelligently sift through vast amounts of information.

- Choose: Augment Code boasts the largest documented context engine (400,000-500,000 file contexts), making it a prime candidate for organizations needing an expansive view.

- Actionable Tip: Always verify the actual context availability for different AI functions (e.g., autocomplete versus chat functions) during proof-of-concept testing.

- Meeting Regulatory Compliance? Certifications are Your Compass.

For any regulated industry, verifiable security and AI governance certifications are non-negotiable. These validate a provider's commitment to protecting your data and adhering to industry standards.

- Choose: Prioritize tools with robust certifications. Augment Code leads with ISO/IEC 42001 AI governance certification, alongside SOC 2 Type II. Sourcegraph Cody Enterprise holds SOC 2 Type II and ISO/IEC 27001:2022. GitHub Copilot Enterprise offers SOC 2 Type II and ISO/IEC 27001, and Tabnine Enterprise holds SOC 2 Type II.

- Pitfall: Never assume compliance; always request and review current certification reports.
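The non-negotiables above work as hard filters applied before any feature comparison. The sketch below encodes the deployment options and certifications exactly as this article describes them, as a snapshot that may be outdated, so verify against current vendor reports. Labels are compared literally (Sourcegraph's "self-hosted" is kept distinct from "on-prem"), and Amazon Q Developer's certification set is empty only because the article lists none, not because none exist. The `Tool` type and `shortlist` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Tool:
    name: str
    deployments: frozenset
    certifications: frozenset

# Snapshot of the options and certifications named in this article.
CATALOG = [
    Tool("GitHub Copilot Enterprise", frozenset({"cloud"}), frozenset({"SOC 2 Type II", "ISO/IEC 27001"})),
    Tool("Sourcegraph Cody Enterprise", frozenset({"cloud", "self-hosted", "vpc"}), frozenset({"SOC 2 Type II", "ISO/IEC 27001"})),
    Tool("Tabnine Enterprise", frozenset({"cloud", "vpc", "on-prem", "air-gapped"}), frozenset({"SOC 2 Type II"})),
    Tool("Augment Code", frozenset({"vpc", "on-prem"}), frozenset({"SOC 2 Type II", "ISO/IEC 42001"})),
    Tool("Cursor", frozenset({"cloud"}), frozenset({"SOC 2 Type II"})),
    Tool("Amazon Q Developer", frozenset({"cloud"}), frozenset()),  # article lists no certifications
]

def shortlist(required_deployment: Optional[str] = None, required_certs: frozenset = frozenset()) -> list[str]:
    """Keep only tools that satisfy every hard requirement."""
    return [
        t.name for t in CATALOG
        if (required_deployment is None or required_deployment in t.deployments)
        and frozenset(required_certs) <= t.certifications
    ]

print(shortlist("air-gapped"))                       # ['Tabnine Enterprise']
print(shortlist("on-prem"))                          # ['Tabnine Enterprise', 'Augment Code']
print(shortlist(required_certs={"ISO/IEC 42001"}))   # ['Augment Code']
```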

Beyond the Hype: Actionable Insights for a Successful Rollout

Implementing Enterprise Application Code Generators is more than just installing a plugin; it's a strategic initiative that, when done right, can fundamentally transform your development lifecycle. Here are the core actionable insights to guide your journey.

Deployment Architecture Outweighs Feature Completeness

This cannot be stressed enough: for regulated industries, the deployment architecture of your AI code generator is the single most critical factor. An AI with fewer "bells and whistles" that aligns with your security compliance requirements (e.g., air-gapped, on-premises, or VPC within customer infrastructure) will always be a better choice than a feature-rich, cloud-only solution that violates your regulatory boundaries, because a tool you cannot legally deploy delivers no value at all. Ensure your chosen solution respects data sovereignty, residency, and access control requirements from day one.

Context Alignment Isn't Just a Buzzword; It's Your Reality Check

Your codebase's complexity is the ultimate litmus test for an AI code generator's effectiveness. If you have 50-500 repositories and millions of lines of code, an AI optimized for single-file contexts will be a frustration, not an aid. Evaluate the maturity of RAG implementations and pre-indexing capabilities over raw context window size. A tool that intelligently retrieves and synthesizes relevant code from across your entire codebase will deliver far greater value than one that merely boasts a large, but often underutilized, token window.
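One way to turn "context alignment" into a number during evaluation is recall@k: for a set of real tasks, have an engineer who knows the code list the files a correct change actually needs, then measure what fraction of those files appear in the tool's top-k retrieved context. This is a minimal sketch; how you capture retrieved files from a given product (logs, UI, or API) is an assumption that varies by vendor, and the task data below is hypothetical.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant files that appear among the first k retrieved files."""
    if not relevant:
        raise ValueError("each task needs at least one relevant file")
    return len(set(retrieved[:k]) & relevant) / len(relevant)

def mean_recall_at_k(tasks: list[tuple[list[str], set[str]]], k: int) -> float:
    """Average recall@k over (retrieved, relevant) pairs, one pair per task."""
    return sum(recall_at_k(retrieved, relevant, k) for retrieved, relevant in tasks) / len(tasks)

# Hypothetical cross-repository tasks: (files the tool retrieved, files the change really needs).
tasks = [
    (["billing/api.py", "billing/models.py", "docs/README.md"], {"billing/api.py", "customer/models.py"}),
    (["auth/token.py", "gateway/routes.py"], {"auth/token.py", "gateway/routes.py"}),
]
print(mean_recall_at_k(tasks, k=3))  # 0.75
```

Running the same task set against each finalist gives a like-for-like comparison that raw context window sizes cannot.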

Systematically Address Security Vulnerabilities in AI-Generated Code

While AI tools like Amazon Q Developer offer built-in vulnerability detection, relying solely on AI output for security is a perilous gamble. AI refinement, especially at scale, can introduce subtle yet significant security flaws that are hard to detect. The most robust strategy involves:

- Prioritizing tools with strong security certifications (SOC 2, ISO/IEC 27001/42001).

- Integrating AI-generated code into your existing, rigorous human-led code review processes. Treat AI output as a powerful suggestion, not a canonical truth.

- Leveraging static analysis tools and security scanners to audit AI-generated code just as diligently as human-written code.

- Implementing strict context controls (for example, excluding secrets and regulated data from what the tool may index or send) to prevent sensitive data from inadvertently being exposed to, or processed by, external AI models.
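A simple form of such a context control is a redaction pass over any code or text before it leaves your network. The sketch below uses a few illustrative regular expressions (the well-known `AKIA`-prefixed AWS access key ID format, PEM private key blocks, and `password = ...` style assignments); the rule names and `redact` function are hypothetical, and a production setup would rely on a dedicated secret scanner plus allowlists of what a tool may index.

```python
import re

# Illustrative patterns only; real secret scanning needs a far larger, tested rule set.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(
        r"-----BEGIN [A-Z ]*PRIVATE KEY-----.*?-----END [A-Z ]*PRIVATE KEY-----", re.DOTALL
    ),
    "password_assignment": re.compile(r"(?i)\b(password|passwd|secret)\s*[:=]\s*\S+"),
}

def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders; return the cleaned text and the rules that fired."""
    fired = []
    for name, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{name}]", text)
        if count:
            fired.append(name)
    return text, fired

snippet = 'aws_key = "AKIAABCDEFGHIJKLMNOP"\npassword = hunter2\nprint("ok")'
clean, fired = redact(snippet)
print(clean)
print(fired)  # ['aws_access_key_id', 'password_assignment']
```

Logging which rules fired, without logging the matched values, also gives security teams an audit trail of what was withheld from external models.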

Your Next Move: Recommended Steps for Implementation

The path to successfully leveraging Enterprise Application Code Generators is iterative and demands rigorous evaluation. Don't jump in blindly; take these measured steps to ensure a return on your investment.

- Conduct Proof-of-Concept (PoC) Testing with 2-3 Finalist Solutions: Select the 2-3 solutions that best align with your initial requirements (especially deployment and compliance). Implement them against a representative sample of your organization's own multi-repository codebases. This is crucial: generic demos won't reveal true performance within your unique technical debt and architectural complexities. Focus on real-world scenarios.

- Measure Context Accuracy, Security Compliance Alignment, and Total Cost of Ownership (TCO): During your PoC, establish clear metrics. How accurate are the suggestions for cross-repository changes? Does the solution truly adhere to your data sovereignty and security policies? Crucially, look beyond the per-user license. Factor in infrastructure costs (for self-hosted), potential data transfer fees, and especially usage-based overage charges common with credit models.

- Evaluate Augment Code Alongside Alternatives, Especially for Advanced Context and ISO/IEC 42001: Given its documented context handling capabilities across hundreds of thousands of files and its ISO/IEC 42001 AI governance certification, Augment Code merits serious consideration, particularly for enterprises with the most complex codebases and stringent governance needs. Pit it directly against competitors in your PoC to see how it performs under your specific load.
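The TCO step above can start as a rough model: annual cost equals seat licenses plus self-hosting infrastructure plus expected usage overage. In the sketch below, the Copilot Enterprise seat price uses this article's quoted figures ($21 + $39 per user per month); the other two tools, along with their infrastructure and overage numbers, are hypothetical placeholders to replace with your own quotes and PoC measurements.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CostModel:
    name: str
    per_user_monthly: float       # license cost per seat per month
    infra_monthly: float = 0.0    # self-hosting: servers, storage, operations time
    overage_monthly: float = 0.0  # expected usage-based charges beyond any included quota

    def annual_tco(self, users: int) -> float:
        return 12 * (users * self.per_user_monthly + self.infra_monthly + self.overage_monthly)

models = [
    CostModel("Copilot Enterprise (cloud)", per_user_monthly=21 + 39),
    CostModel("Hypothetical self-hosted tool", per_user_monthly=25, infra_monthly=3000),
    CostModel("Hypothetical credit-based tool", per_user_monthly=50, overage_monthly=800),
]
for m in models:
    print(f"{m.name}: ${m.annual_tco(100):,.0f}")
# Copilot Enterprise (cloud): $72,000
# Hypothetical self-hosted tool: $66,000
# Hypothetical credit-based tool: $69,600
```

Keeping infrastructure and overage as separate fields makes it easy to see when a cheaper per-seat price is offset by self-hosting or usage costs.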

By systematically evaluating Enterprise Application Code Generators through the lens of deployment architecture, multi-repository context, and robust security practices, you can move beyond the hype and truly harness their power to transform your development landscape.